Visual odometry

Evaluated classical stereo vision and deep learning-based methods for visual odometry on the KITTI dataset, analyzing their efficacy in calculating depth maps and tracking motion.

Stereo Vision and Visual Odometry Project with KITTI Dataset

Group Members

Project Description & Goals

Description

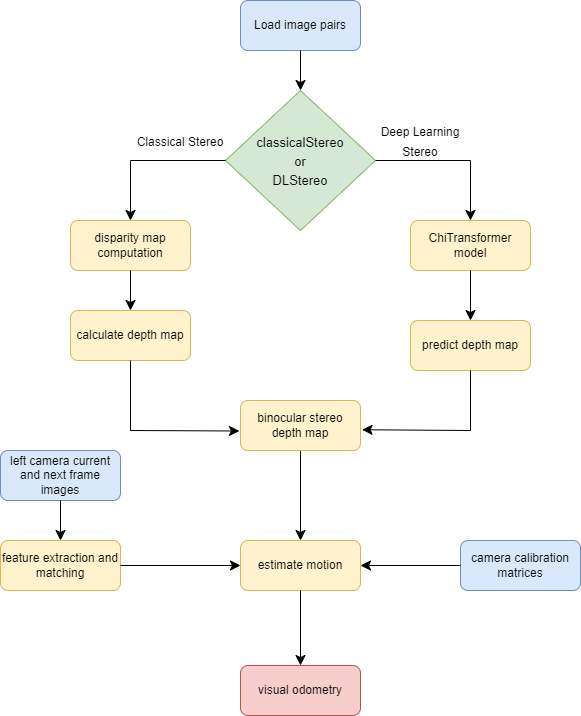

Reconstructing 3D information from images has many applications in robotics and autonomous vehicles. For example, it is crucial for autonomous vehicles to know their position in 3D space. Such localization information can be recovered through visual odometry, in which the pose of the camera is tracked as it moves through an environment. Visual odometry is often built on stereo vision for depth estimation: stereo vision determines the 3D coordinates of features in stereo pairs taken over time. Then, for each subsequent pair, the transformation that best describes the change in those feature positions is computed, which allows the camera to be tracked through the environment. In this project, we aim to understand stereo vision, and subsequently visual odometry, following two approaches: classical stereo vision and deep learning-based stereo vision.
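
As a rough illustration of the "transformation that best describes the change" step, the sketch below fits a rigid transform to matched 3D points from two consecutive frames using the Kabsch (SVD) method and chains it into a camera pose. This is one common approach and is not necessarily the method used in this project's pipeline. The function names and the synthetic points are hypothetical, and the matches are assumed to be outlier-free.

```python
import numpy as np


def estimate_rigid_transform(src, dst):
    """Least-squares R, t such that dst ~= R @ src + t (Kabsch method).

    src, dst: (N, 3) arrays of matched 3D points, N >= 3.
    """
    src_centroid = src.mean(axis=0)
    dst_centroid = dst.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (src - src_centroid).T @ (dst - dst_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_centroid - R @ src_centroid
    return R, t


def to_homogeneous(R, t):
    """Pack R (3x3) and t (3,) into a 4x4 homogeneous transform."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


# Synthetic example: 3D feature positions expressed in the previous camera frame.
rng = np.random.default_rng(0)
points_prev = rng.uniform(-5, 5, size=(50, 3))

# Hypothetical ground-truth motion: small yaw plus a translation.
angle = np.deg2rad(3.0)
R_true = np.array([
    [np.cos(angle), 0.0, np.sin(angle)],
    [0.0, 1.0, 0.0],
    [-np.sin(angle), 0.0, np.cos(angle)],
])
t_true = np.array([0.1, 0.0, -0.8])
points_curr = points_prev @ R_true.T + t_true  # same features, current frame

R_est, t_est = estimate_rigid_transform(points_prev, points_curr)

# The estimate maps previous-frame coordinates to current-frame coordinates,
# so the camera pose in the world frame is updated with its inverse.
pose = np.eye(4)  # pose of the first camera frame
pose = pose @ np.linalg.inv(to_homogeneous(R_est, t_est))
```

With real feature matches, an estimator like this would normally be wrapped in an outlier-rejection scheme such as RANSAC, since a single bad match can bias a least-squares fit.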

Goals

Preliminaries:

- Understanding the KITTI datasets: Both team members will collaborate to familiarize themselves with the KITTI stereo and visual odometry datasets.

- Understanding basic mathematics and algorithms for stereo and visual odometry: Both team members will acquire a strong theoretical foundation in 3D vision techniques.

Implement traditional stereo vision:

- Implement traditional stereo vision: Both team members will work together to implement traditional stereo vision techniques using tutorials and resources.

- Test on KITTI stereo and visual odometry datasets: The implemented traditional stereo vision will be tested on the KITTI datasets.

- Implement visual odometry: Both team members will work on implementing visual odometry using tutorials.

Implement deep learning for stereo vision:

- Explore and run the deep learning model on the stereo dataset: Harris will lead this effort, exploring and running deep learning models on the stereo dataset.

- Explore and run the deep learning model on the odometry dataset: Saharsh will lead this part of the project, exploring and running deep learning models on the odometry dataset.

- Replace traditional stereo with deep learning in visual odometry and compare performance: Both team members will collaborate to replace traditional stereo with deep learning in the visual odometry pipeline and evaluate their respective performances using labeled sequences from the KITTI dataset.

Member Roles

- Harris Nisar:

- Explore and run the deep learning model on the stereo dataset.

- Saharsh Sandeep Barve:

- Explore and run the deep learning model on the odometry dataset.

Both team members will collaborate on other aspects of the project, including understanding the datasets, basic mathematics, implementing traditional stereo vision, implementing visual odometry, and comparing the impact of the two stereo vision approaches for visual odometry.

Resources

Data:

- KITTI Stereo Dataset: Contains 200 training scenes and 200 test scenes with four color images per scene, saved in lossless PNG format. Ground truth data is available for evaluation.

- KITTI Visual Odometry Dataset: Consists of 22 stereo sequences in lossless PNG format, with ground truth trajectories available for 11 training sequences (00-10).
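
The ground truth trajectories are distributed as text files in which each line holds 12 numbers: a 3x4 `[R | t]` camera pose matrix flattened row by row. A minimal sketch of reading them is shown below. The function name `parse_kitti_poses` and the two sample lines are made up for illustration, and the function takes the file contents as a string rather than a path.

```python
import numpy as np


def parse_kitti_poses(text):
    """Parse KITTI odometry ground truth text into an (N, 4, 4) array."""
    poses = []
    for line in text.strip().splitlines():
        values = np.array(line.split(), dtype=np.float64)
        if values.size != 12:
            raise ValueError(f"expected 12 values per line, got {values.size}")
        T = np.eye(4)
        T[:3, :4] = values.reshape(3, 4)  # row-major 3x4 [R | t]
        poses.append(T)
    return np.stack(poses)


# Two made-up pose lines in the same layout as a ground truth file.
sample = """1 0 0 0 0 1 0 0 0 0 1 0
1 0 0 0.5 0 1 0 -0.1 0 0 1 2.0"""

poses = parse_kitti_poses(sample)
positions = poses[:, :3, 3]  # camera positions, one row per frame
```

Plotting the x and z columns of `positions` gives the familiar top-down trajectory view used to compare an estimated path with the ground truth.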

Implementation Platform:

- Language: Python

- Libraries: OpenCV, PIL, Numpy, PyTorch

Resources and Tutorials:

- Stereo Vision 1

- Visual Odometry 1

- Visual Odometry 2

- Efficient Deep Learning for Stereo Matching

- Deep Learning for Depth Estimation

Computational Resources:

The project requires substantial computational resources for both model training and inference. The team has access to suitable hardware, including an RTX 3070 GPU.

Project Results

Project result video:

Raw Images

Left Camera Image

Right Camera Image

Disparity Map SGBM from Classical Stereo Vision

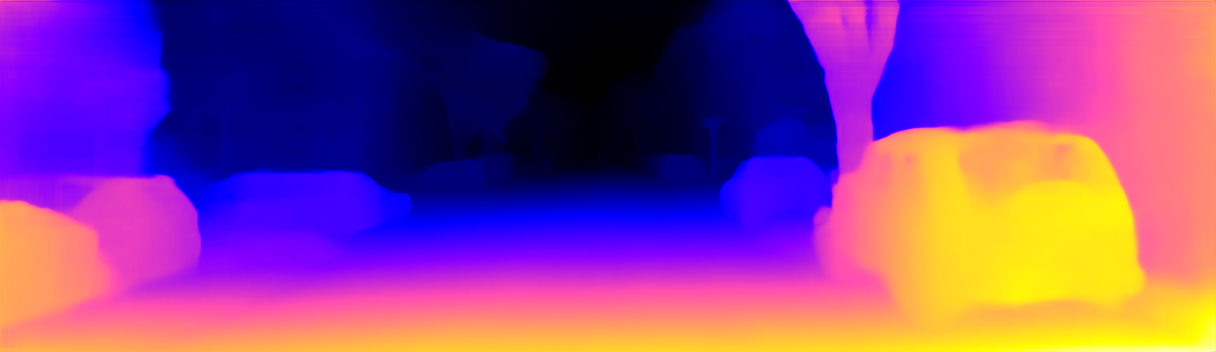
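
For reference, a disparity map like the one above can be computed with OpenCV's semi-global block matcher and converted to depth with Z = f * B / d. The sketch below is only an outline under stated assumptions. The matcher parameters are illustrative defaults, not the values used for the results shown here. The focal length and baseline in the example are placeholder numbers and should be read from the KITTI calibration files in practice.

```python
import numpy as np


def compute_sgbm_disparity(left_gray, right_gray, num_disparities=96, block_size=11):
    """Disparity map in pixels from a rectified 8-bit grayscale stereo pair."""
    import cv2  # imported here so the depth helper below works without OpenCV

    matcher = cv2.StereoSGBM_create(
        minDisparity=0,
        numDisparities=num_disparities,  # must be divisible by 16
        blockSize=block_size,
        P1=8 * block_size ** 2,   # penalty for small disparity changes
        P2=32 * block_size ** 2,  # penalty for large disparity changes
    )
    # OpenCV returns fixed-point disparities scaled by 16.
    return matcher.compute(left_gray, right_gray).astype(np.float32) / 16.0


def disparity_to_depth(disparity, focal_px, baseline_m):
    """Depth Z = f * B / d; pixels with non-positive disparity become NaN."""
    depth = np.full(disparity.shape, np.nan, dtype=np.float32)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth


# Tiny hand-made disparity map (pixels) with placeholder camera parameters.
disparity = np.array([[0.0, 10.0], [20.0, 40.0]], dtype=np.float32)
depth = disparity_to_depth(disparity, focal_px=700.0, baseline_m=0.5)
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant points (small disparity) than for nearby ones.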

Depth from Deep Learning Stereo Vision

Odometry Results

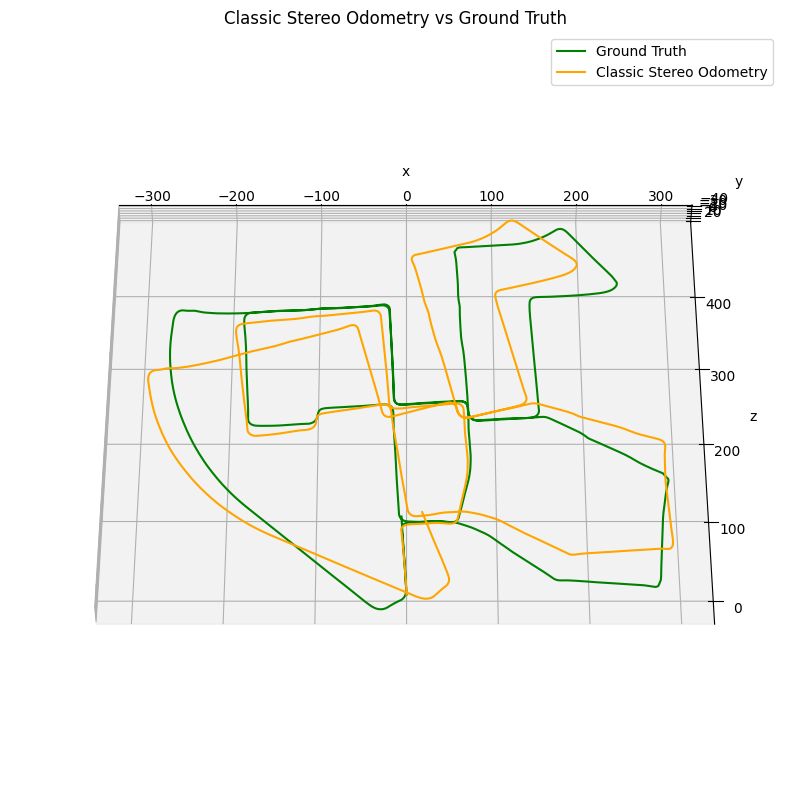

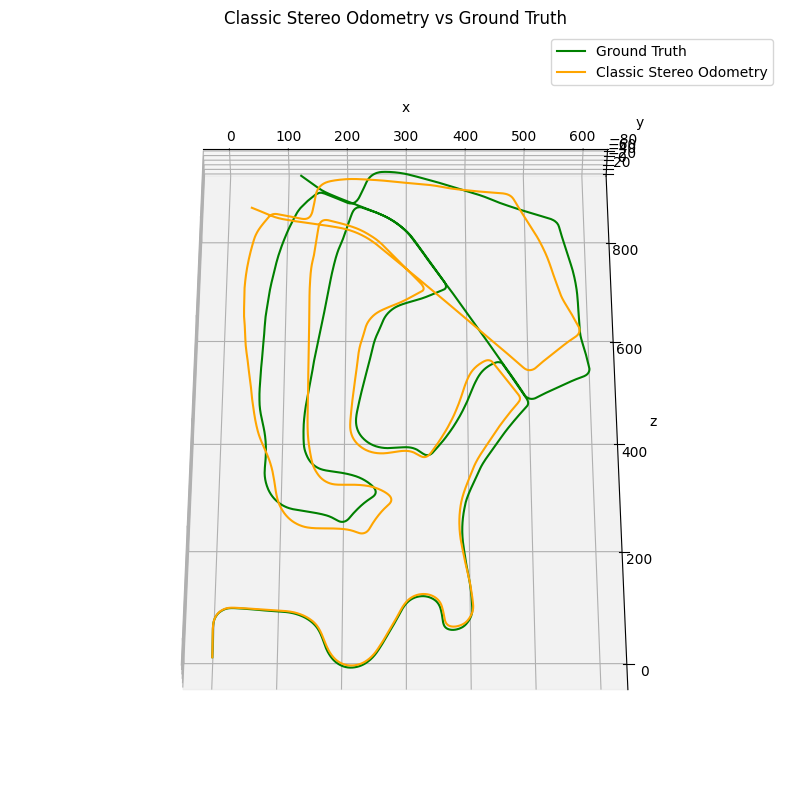

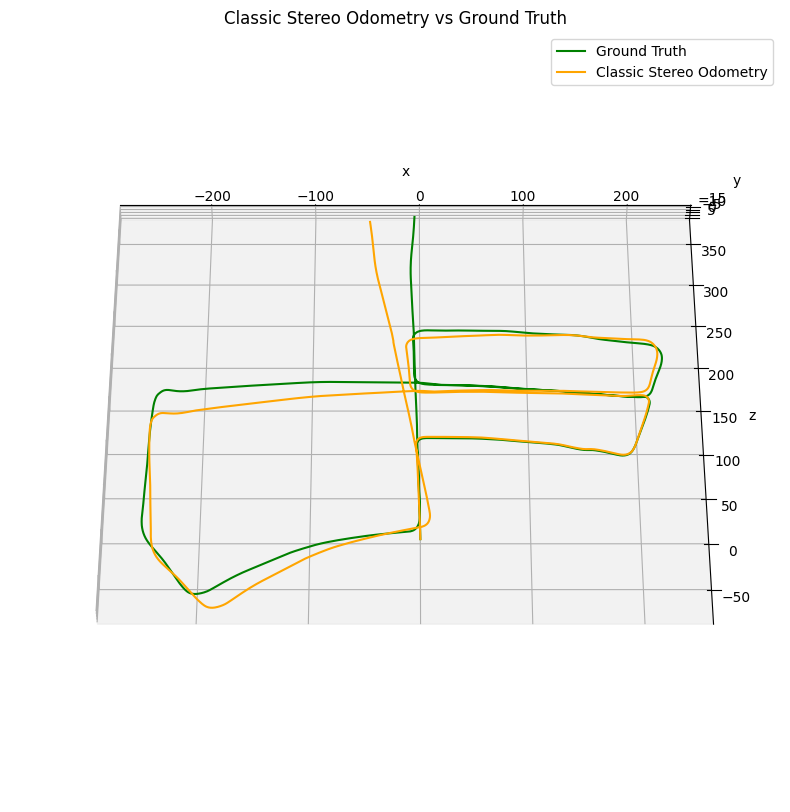

Classical Stereo Vision

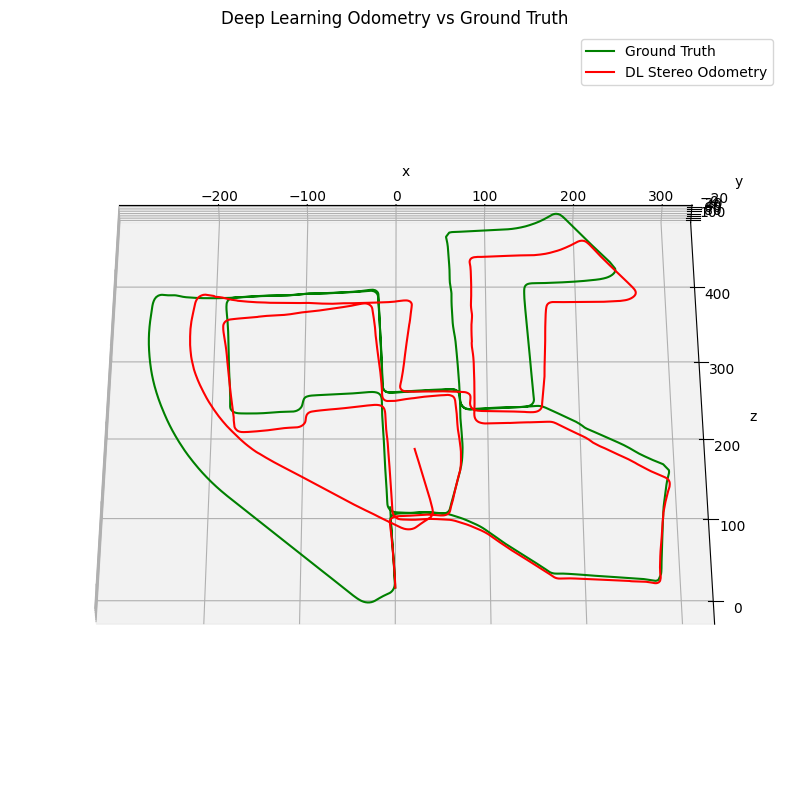

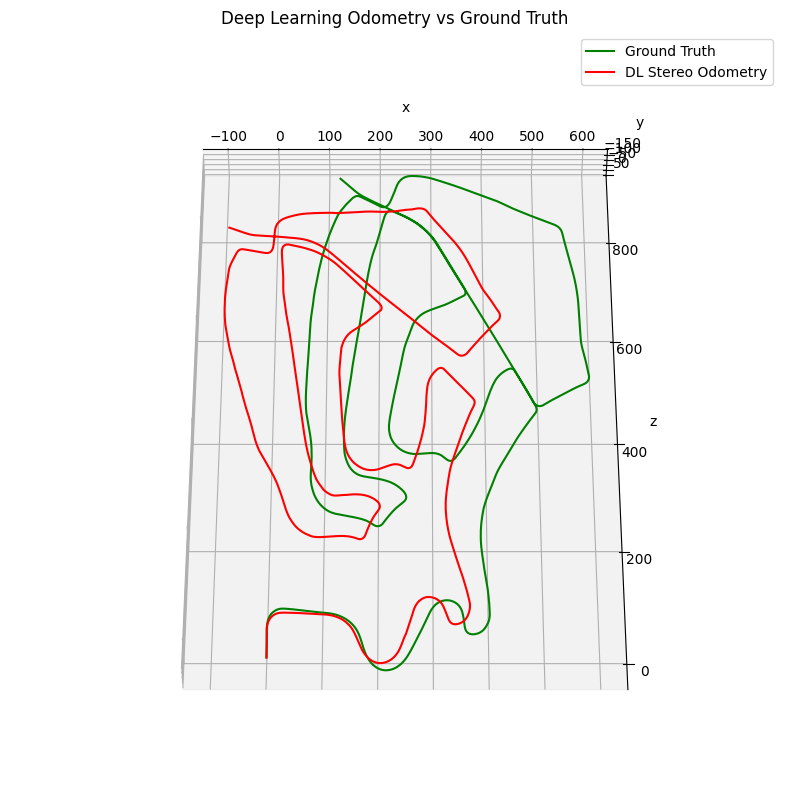

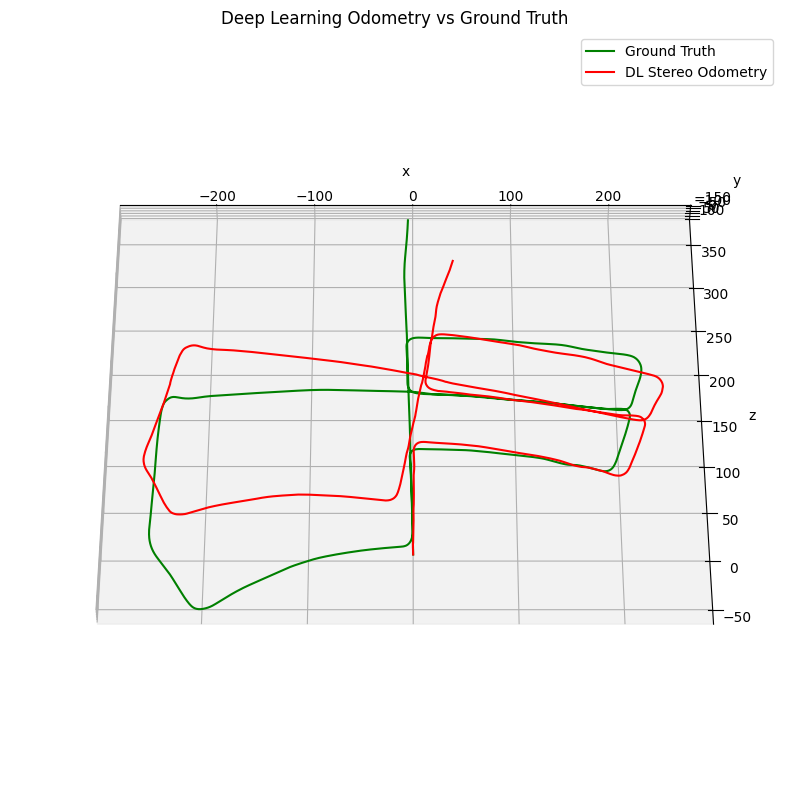

Deep Learning Stereo Vision

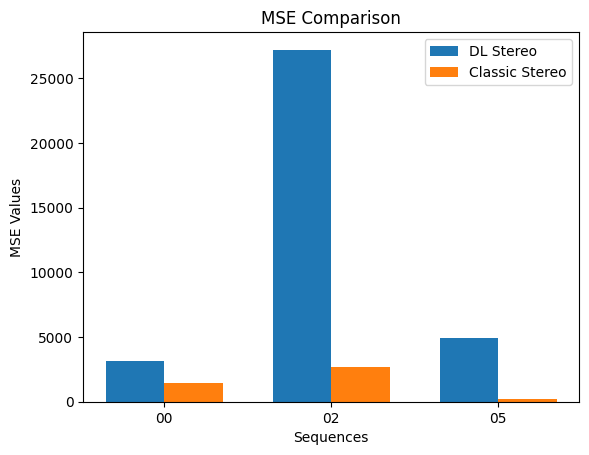

Mean Squared Error (MSE)

The images above show the raw input images used in the visual odometry pipeline, together with the results obtained from classical stereo vision and deep learning stereo vision. The odometry results for different sequences are presented for both classical and deep learning stereo vision. Additionally, the Mean Squared Error (MSE) in depth estimation is visualized to evaluate the performance of the stereo vision methods.
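
A minimal sketch of a depth MSE computation is given below, assuming the metric compares an estimated depth map against a reference depth map pixel by pixel. The masking rules (ignoring NaN values and pixels with no reference depth) and the name `depth_mse` are assumptions made for this illustration and are not taken from the project code.

```python
import numpy as np


def depth_mse(pred, target, max_depth=None):
    """Mean squared error between two depth maps over valid pixels only."""
    valid = np.isfinite(pred) & np.isfinite(target) & (target > 0)
    if max_depth is not None:
        valid &= target <= max_depth  # optionally ignore very distant pixels
    if not valid.any():
        raise ValueError("no valid pixels to compare")
    diff = pred[valid] - target[valid]
    return float(np.mean(diff ** 2))


# Made-up 2x2 depth maps in metres; NaN and 0 mark missing values.
pred = np.array([[10.0, 20.0], [np.nan, 5.0]])
target = np.array([[12.0, 20.0], [30.0, 0.0]])
mse = depth_mse(pred, target)
```

Only the two pixels in the top row are valid in this example, so the error is averaged over the differences -2 and 0.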

For a more detailed analysis and discussion of the results, please refer to the corresponding sections in the report.

Background

This project is significant for the team as it aligns with their work on a real-time medical instrument tracking system, which involves intelligent simulations and utilizes stereo vision techniques. Although the dataset and scale differ (cars vs. medical tools), the primary objective is to gain theoretical and practical expertise in 3D vision techniques, demystifying these complex algorithms. Additionally, implementing these algorithms in Python using familiar libraries like OpenCV and PyTorch will establish a solid foundation for their research work.

Acknowledgement

We would like to acknowledge the work at this repository, which made the concepts of visual odometry easy to understand and provided much of the code that we reused for the pipeline.

References

[1] D. Scaramuzza and F. Fraundorfer, “Visual Odometry [Tutorial],” IEEE Robot. Autom. Mag., vol. 18, no. 4, pp. 80–92, Dec. 2011, doi: 10.1109/MRA.2011.943233.

[2] H. Moravec, “Obstacle Avoidance and Navigation in the Real World by a Seeing Robot Rover.” Sep. 1980. [Online]. Available: Link to PDF

[3] A. Geiger, P. Lenz, and R. Urtasun, “Are we ready for autonomous driving? The KITTI vision benchmark suite,” in 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI: IEEE, Jun. 2012, pp. 3354–3361. doi: 10.1109/CVPR.2012.6248074.

[4] Q. Su and S. Ji, “ChiTransformer: Towards Reliable Stereo from Cues,” 2022, doi: 10.48550/ARXIV.2203.04554.

[5] G. Bradski, “The OpenCV library,” Dr. Dobb’s J. Softw. Tools, 2000.

[6] D. G. Lowe, “Distinctive Image Features from Scale-Invariant Keypoints,” Int. J. Comput. Vis., vol. 60, no. 2, pp. 91–110, Nov. 2004, doi: 10.1023/B:VISI.0000029664.99615.94.